Reversible Image Processing for Color Images with Flexible Control

Abstract

1. Introduction

2. Related Works

2.1. Contrast Enhancement Methods for Grayscale Images

2.2. Contrast Enhancement Methods for Color Images

2.3. Contrast Enhancement and Saturation Improvement Method for Color Images

3. Proposed Method

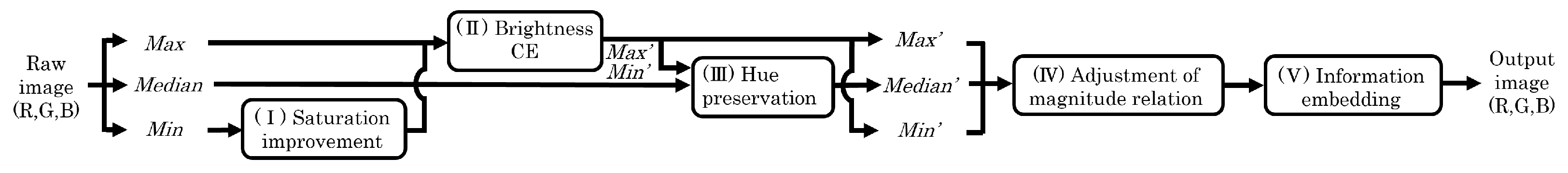

3.1. Image Processing

3.1.1. Sharpening and Smoothing

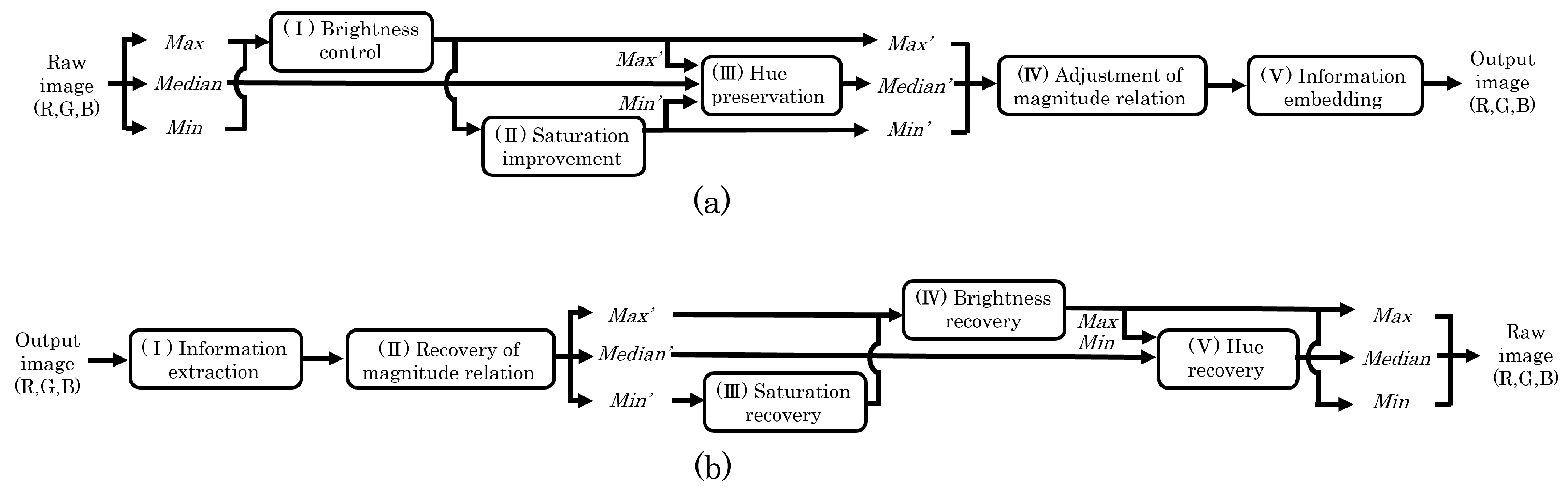

- Step 1:

- Divide the values into the target and reference regions.

- Step 2:

- Given an image with a size of , and denote the original and output target points, respectively. , , , and represent the four reference points, where and .

- Step 3:

- Obtain with the following equation on the basis of Equation (3): This step prevents saturation distortion.
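The division into target and reference regions in Step 1 can be illustrated with a checkerboard partition, in which each target point has its four neighbours in the reference region. This is only a sketch under that assumption: the text above does not spell out the partition, and the names `checkerboard_masks` and `four_neighbours` are hypothetical.

```python
import numpy as np


def checkerboard_masks(height, width):
    """Return boolean masks (target, reference) forming a checkerboard.

    Assumption: target points are the pixels whose row + column index is even.
    """
    rows, cols = np.indices((height, width))
    target = (rows + cols) % 2 == 0
    return target, ~target


def four_neighbours(i, j, height, width):
    """Return the in-bounds up/down/left/right neighbours of pixel (i, j)."""
    candidates = [(i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)]
    return [(r, c) for r, c in candidates if 0 <= r < height and 0 <= c < width]


target, reference = checkerboard_masks(4, 5)
```

With this split, the reference region stays untouched while a target point is modified, so the same reference values are available again at recovery time.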

3.1.2. Brightness Increase or Decrease

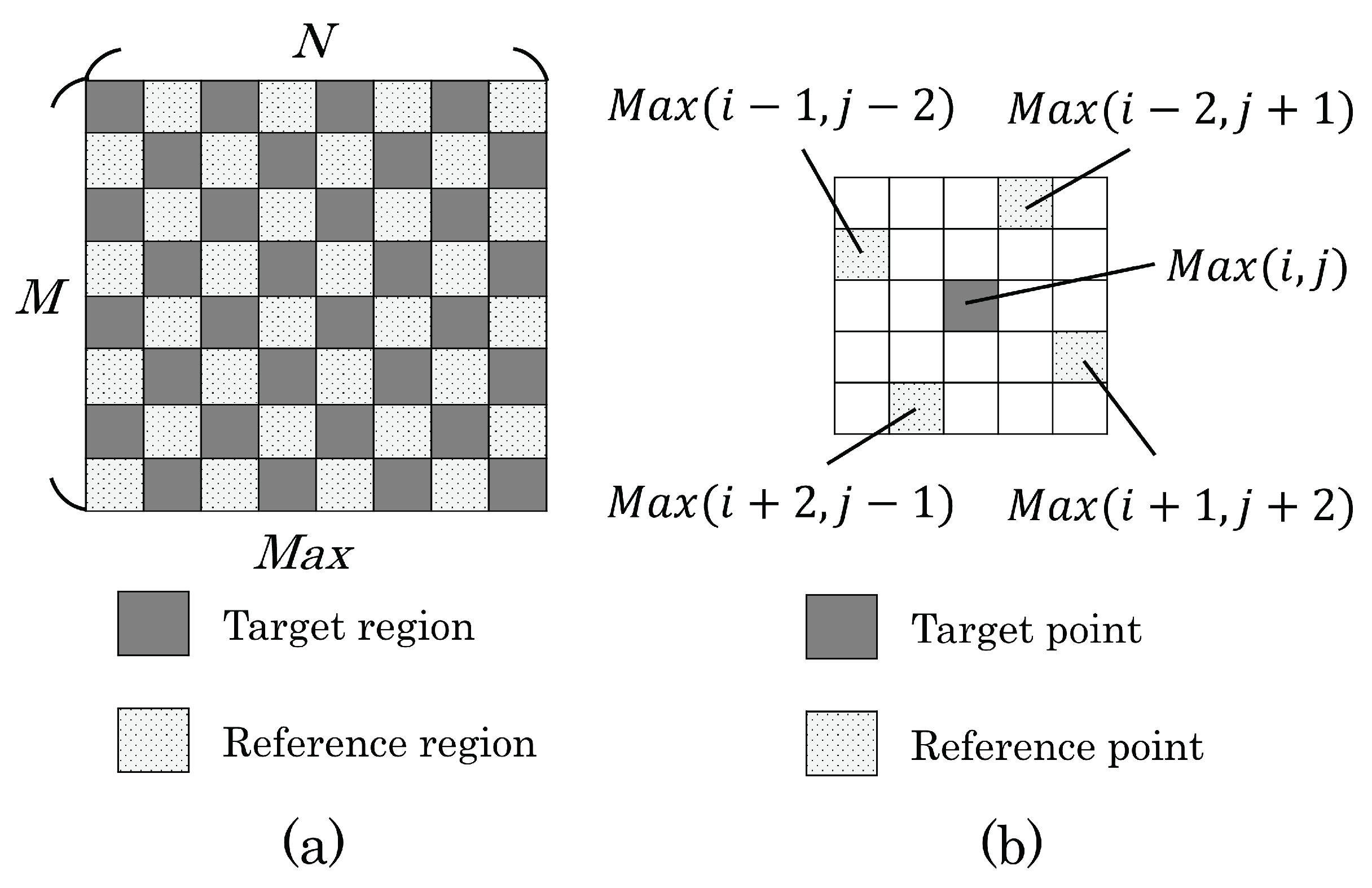

- Step 1:

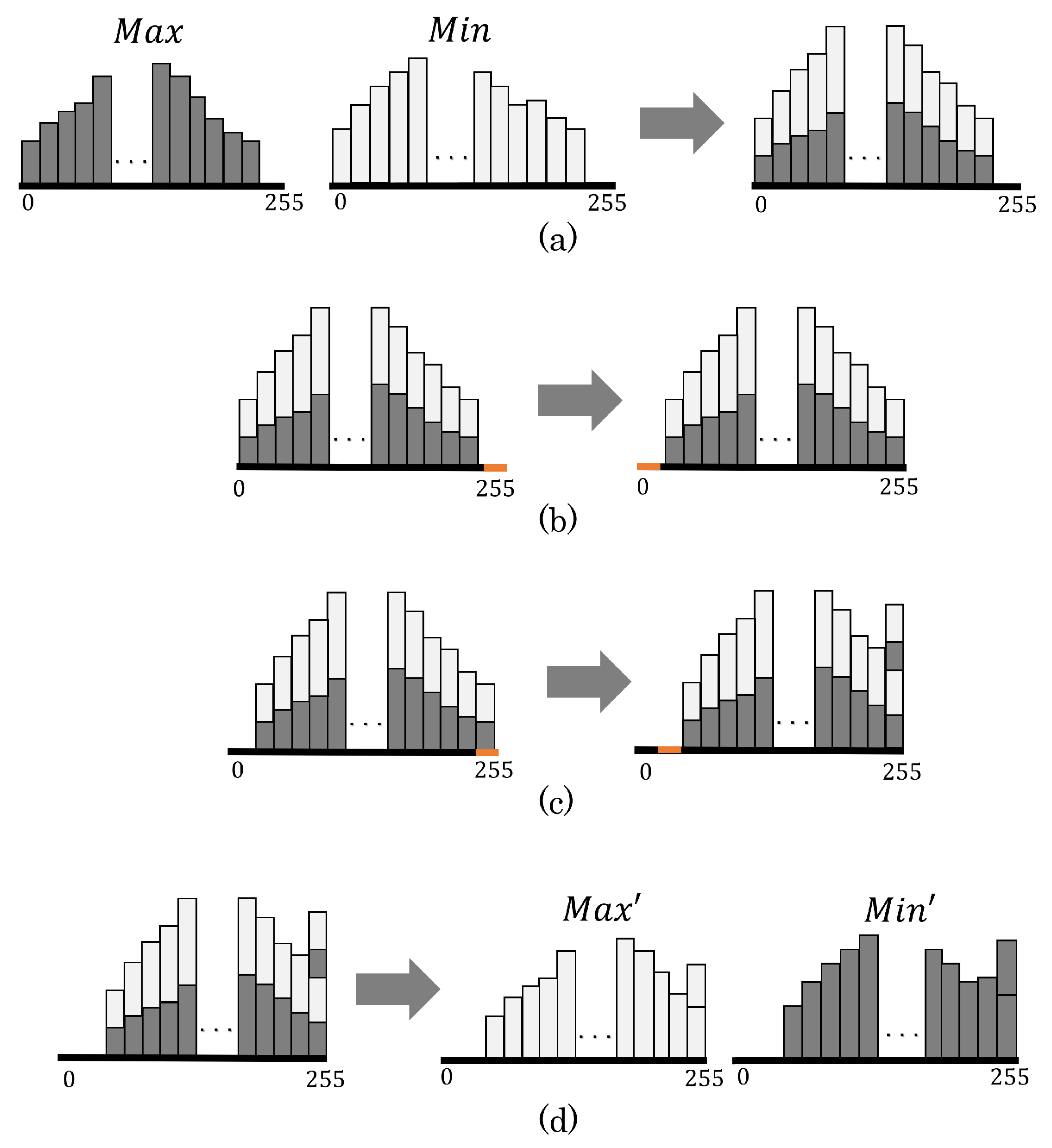

- Integrate the histograms of and (see Figure 4a).

- Step 2:

- Define the rightmost bin of the histogram as the reference bin. If the number of pixels belonging to the reference bin exceeds 1% of the total number of pixels, the left adjacent bin is defined as the reference bin instead.

- Step 3:

- Step 4:

- Separate the integrated histogram into the and ones (see Figure 4d).
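A minimal sketch of the reference-bin rule in Step 2. It assumes 8-bit values, assumes that the "rightmost bin" is bin 255, and applies the 1% rule once. The function name `select_reference_bin` and the flat-array input are illustrative choices, not the authors' code.

```python
import numpy as np


def select_reference_bin(values, threshold=0.01):
    """Pick the reference bin of an integrated histogram.

    values: array-like of 8-bit values (the integrated histogram input).
    Returns (reference_bin, reference_bin_is_empty).
    """
    values = np.asarray(values, dtype=np.uint8).ravel()
    hist = np.bincount(values, minlength=256)
    ref = 255  # assumption: the rightmost bin is the top of the 8-bit range
    if hist[ref] > threshold * values.size:
        ref -= 1  # too many pixels in bin 255: use the left adjacent bin
    return ref, bool(hist[ref] == 0)


# 100 values, two of them at 255 (2% > 1%), so bin 254 becomes the reference.
sample = [10, 20, 255, 255] + [100] * 96
ref_bin, ref_is_empty = select_reference_bin(sample)
```

The second return value corresponds to the one-bit classification data that the recovery information stores.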

3.1.3. Recovery Information

- (i)

- For Sharpening and Smoothing: As described in Section 3.1.1, the sharpening and smoothing functions cause rounding errors in the and values. These errors prevent the raw image from being restored, so they must be recorded as recovery information. If a large number of rounding errors arise, the amount of recovery information may exceed the hiding capacity. Two location maps are prepared for and to mark the pixels with rounding errors. The method compresses the maps according to the JBIG2 standard [20] and the error values with Huffman coding. These data are required for every single process. Additionally, the parameter values of or must be stored for reversibility.

- (ii)

- For Brightness Increase or Decrease: The bin data before image processing (hereafter the intact bin data) need to be stored. The intact bin data consist mainly of three types of data. The first is the eight-bit pixel value of the reference bin in Step 2. The second is the one-bit classification data that indicate whether the reference bin is empty in Step 3. If the reference bin is not empty, one extra bit is required for each integrated pixel to identify its original bin, namely the reference bin or the adjacent bin. These data are required for every single process.
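The three items listed in (ii) can be pictured as a short bit string. The sketch below is one possible layout (eight bits for the reference bin, one classification bit, then one bit per integrated pixel). The field order and the names `pack_recovery_info` and `unpack_recovery_info` are assumptions, since the text only lists the items.

```python
def pack_recovery_info(ref_bin, ref_nonempty, origin_bits):
    """Serialize the intact bin data of one brightness step as a '0'/'1' string.

    ref_bin:      pixel value of the reference bin (0..255), stored in 8 bits.
    ref_nonempty: True if the reference bin held pixels before processing.
    origin_bits:  one 0/1 flag per integrated pixel; must be empty when the
                  reference bin was empty.
    """
    if not 0 <= ref_bin <= 255:
        raise ValueError("ref_bin must fit in eight bits")
    if not ref_nonempty and origin_bits:
        raise ValueError("origin bits are only stored for a non-empty bin")
    header = format(ref_bin, "08b") + ("1" if ref_nonempty else "0")
    return header + "".join(str(int(b)) for b in origin_bits)


def unpack_recovery_info(bits):
    """Inverse of pack_recovery_info."""
    ref_bin = int(bits[:8], 2)
    ref_nonempty = bits[8] == "1"
    origin_bits = [int(b) for b in bits[9:]] if ref_nonempty else []
    return ref_bin, ref_nonempty, origin_bits


packed = pack_recovery_info(254, True, [1, 0, 1])
```

Under this layout, a step with an empty reference bin costs nine bits, and a step with a non-empty one costs nine bits plus one bit per integrated pixel.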

3.2. Raw Image Recovery

3.2.1. Recovery from Sharpening and Smoothing

- Step 1:

- Divide into target and reference regions, similar to with in Step 1 of Section 3.1.1.

- Step 2:

- Step 3:

3.2.2. Recovery from Brightness Increase or Decrease

- Step 1:

- Integrate the histograms of and .

- Step 2:

- Obtain the reference bin value and the classification data, which indicate whether the reference bin was empty or not before the increasing process, from the recovery information.

- Step 3:

- Shift the pixels belonging to the bins between the reference bin and the leftmost bin by −1. If the reference bin was not empty before the increasing process, the pixels that have been integrated into the left adjacent bin are returned to the reference bin by referring to the recovery information. Accordingly, the original values of and are recovered.

- Step 4:

- Separate the integrated histogram into and histograms.
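The recovery steps above can be checked with a small round trip. The sketch below works on a single array of 8-bit values standing in for the integrated histogram. The forward step is inferred from the recovery description (every value below the reference bin is shifted by +1, and values already in the reference bin stay), so it is an assumption rather than the authors' exact procedure. The function names are hypothetical.

```python
import numpy as np


def increase_brightness_step(values, ref):
    """One assumed forward step: shift every value below `ref` by +1."""
    values = np.asarray(values, dtype=np.uint8)
    out = values.copy()
    ref_was_empty = not np.any(values == ref)
    out[values < ref] += 1
    origin_bits = None
    if not ref_was_empty:
        # One flag per pixel now in the reference bin:
        # True if it was there originally, False if it came from bin ref - 1.
        origin_bits = values[out == ref] == ref
    return out, ref_was_empty, origin_bits


def recover_step(processed, ref, ref_was_empty, origin_bits):
    """Undo one step: shift bins up to `ref` by -1, then restore merged pixels."""
    processed = np.asarray(processed, dtype=np.uint8)
    restored = processed.copy()
    shifted = (processed >= 1) & (processed <= ref)
    restored[shifted] -= 1
    if not ref_was_empty:
        merged = processed == ref
        merged_values = restored[merged]
        merged_values[origin_bits] = ref  # pixels that started in the reference bin
        restored[merged] = merged_values
    return restored


raw = np.array([253, 254, 255, 255, 10], dtype=np.uint8)
brighter, was_empty, bits = increase_brightness_step(raw, 255)
recovered = recover_step(brighter, 255, was_empty, bits)
```

Here `brighter` is `[254, 255, 255, 255, 11]` and `recovered` equals `raw`. A larger brightness change would repeat the step, with one set of recovery information per repetition.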

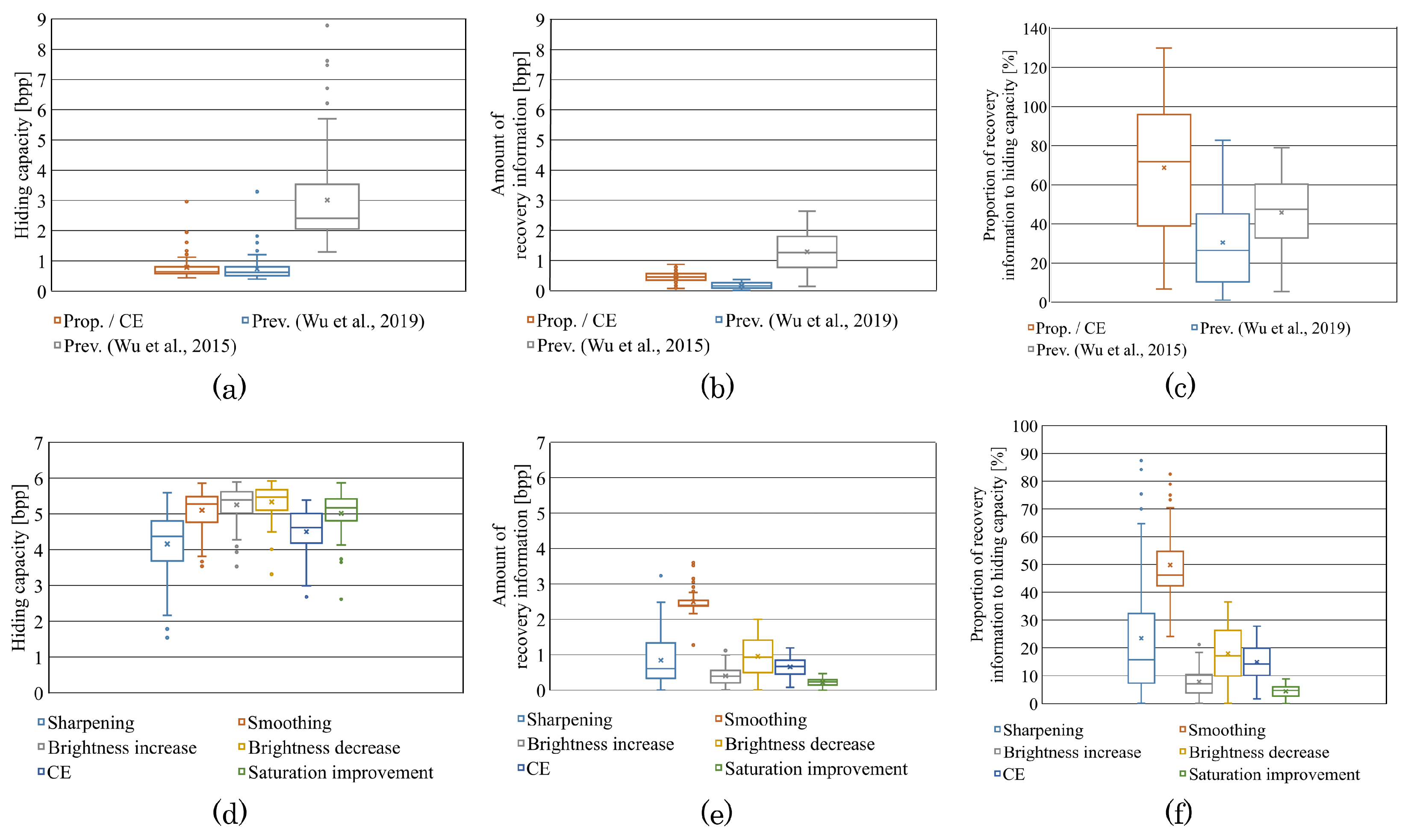

4. Experimental Results

4.1. Evaluation Indices

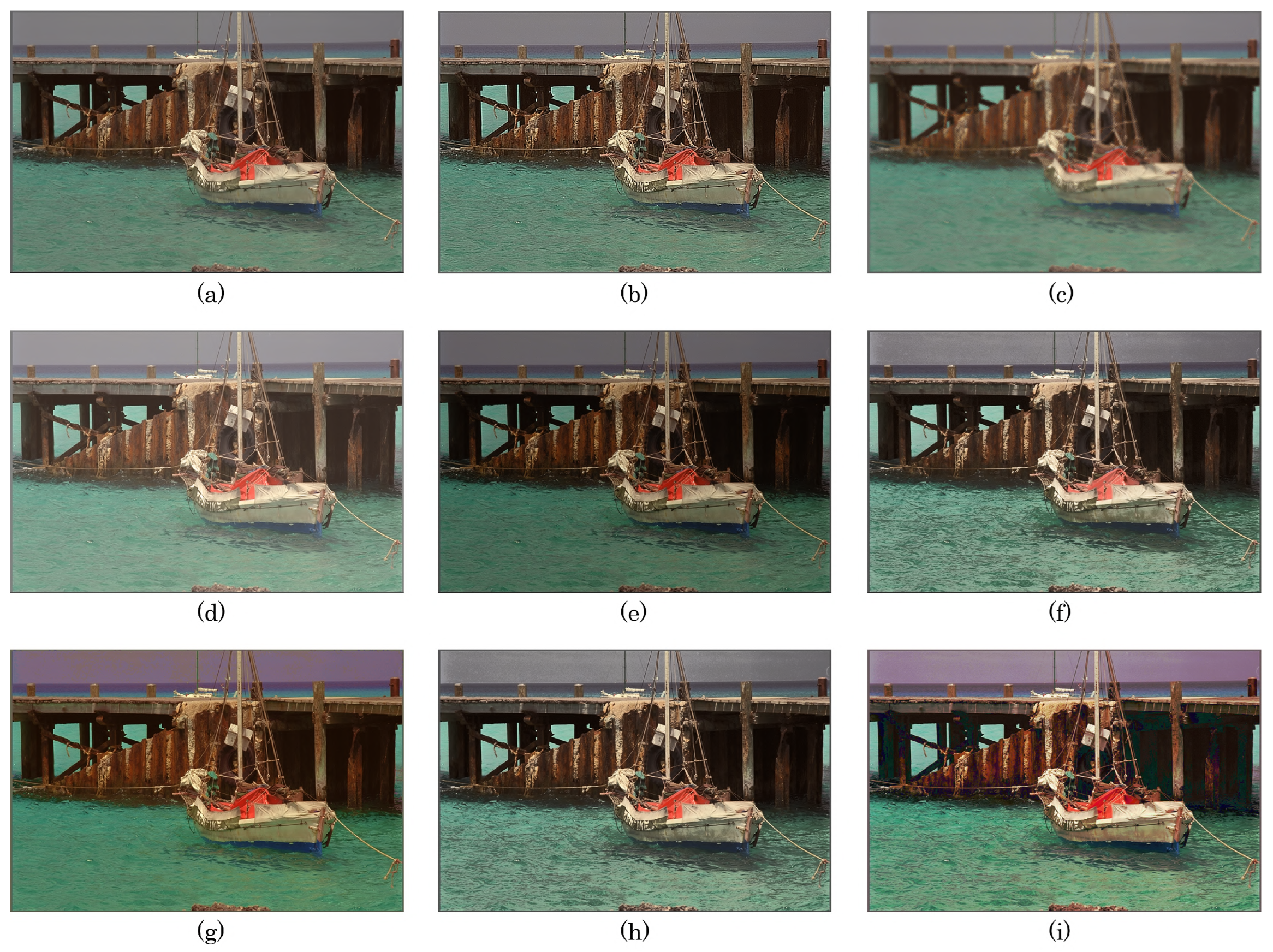

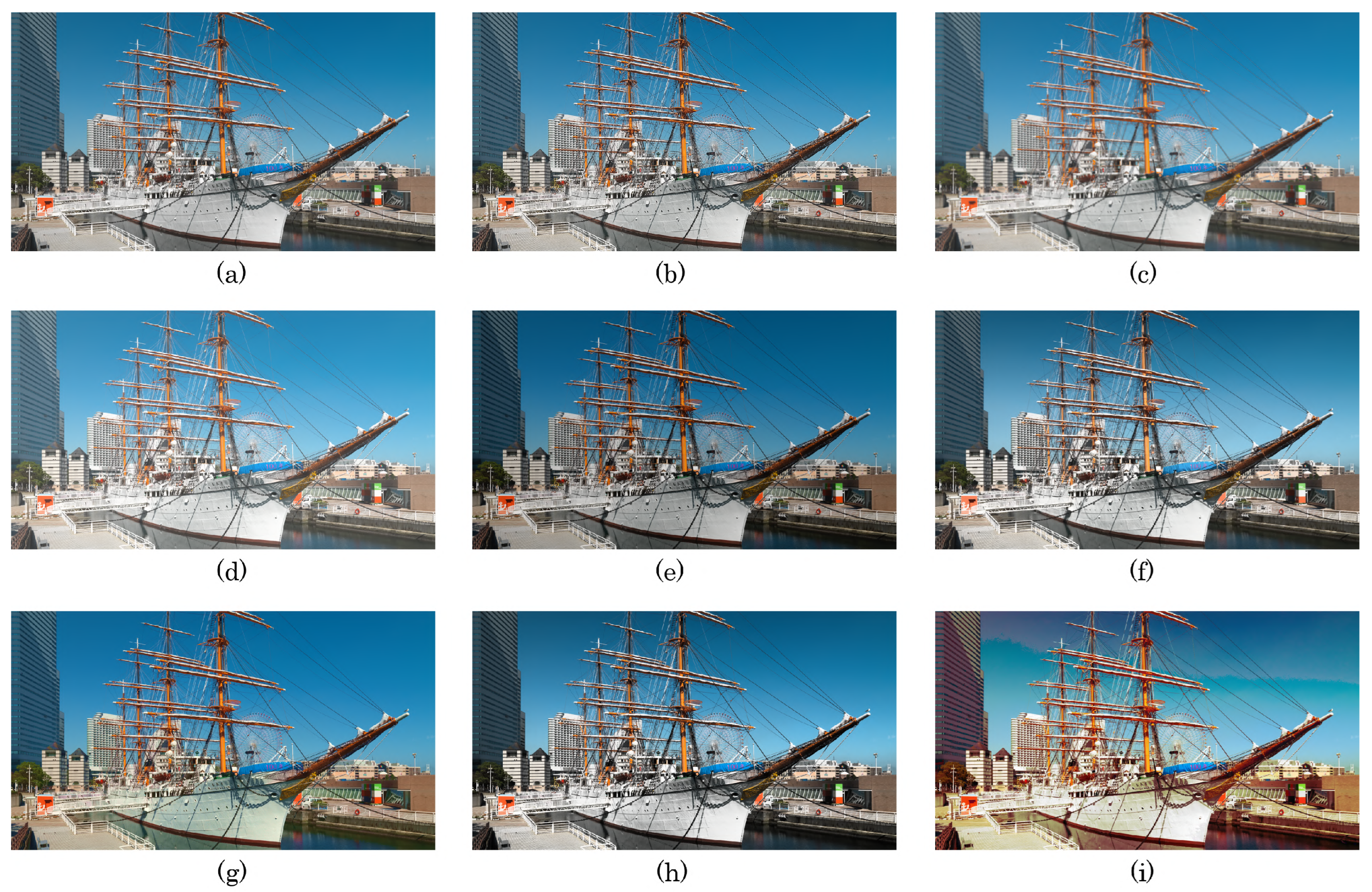

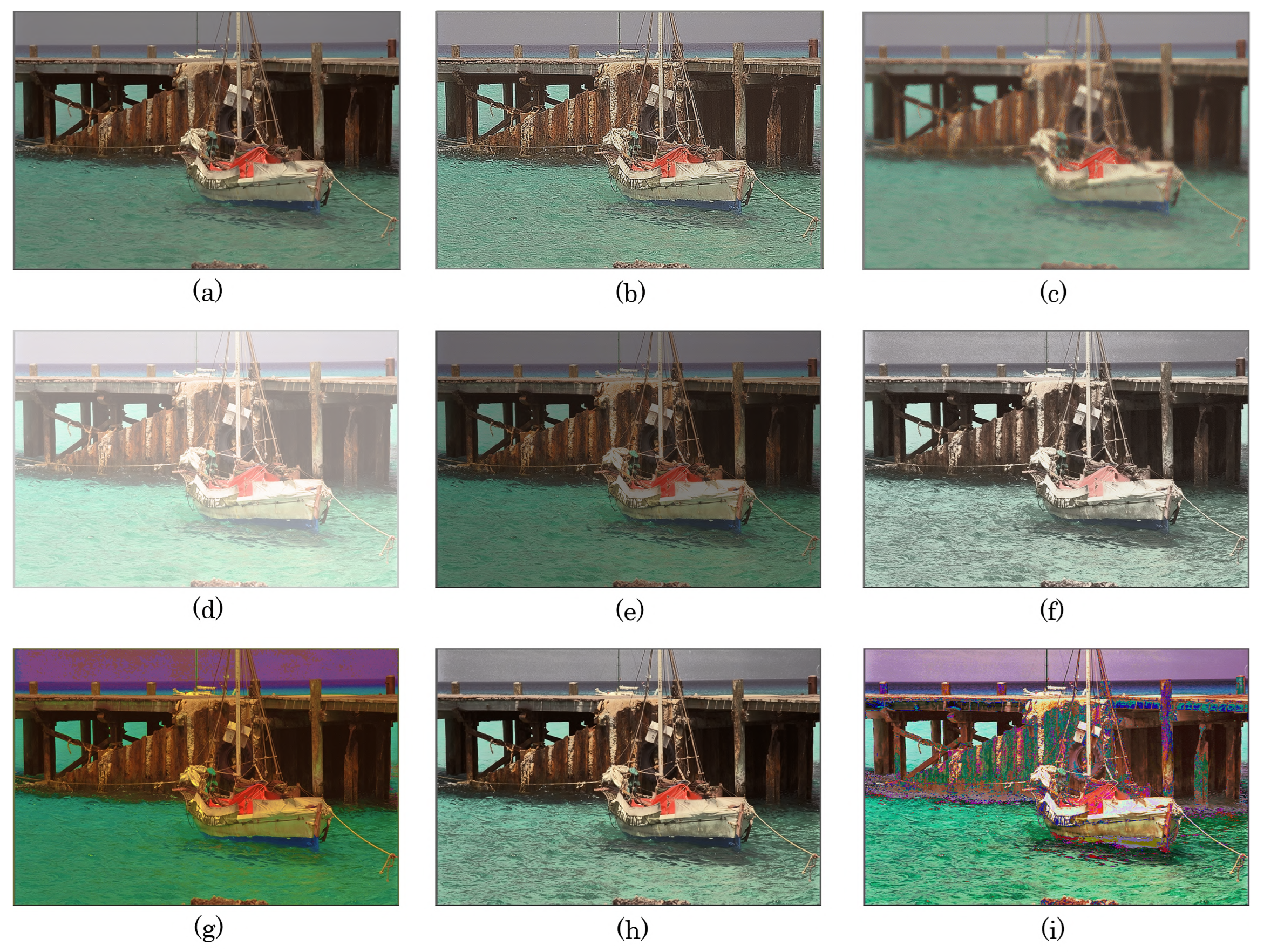

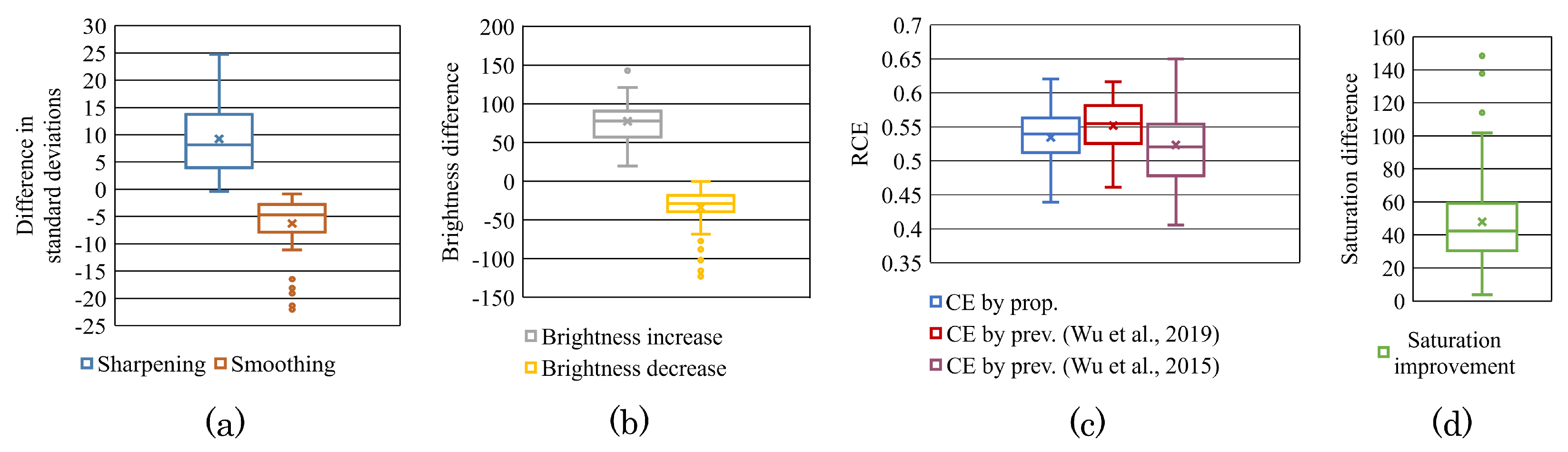

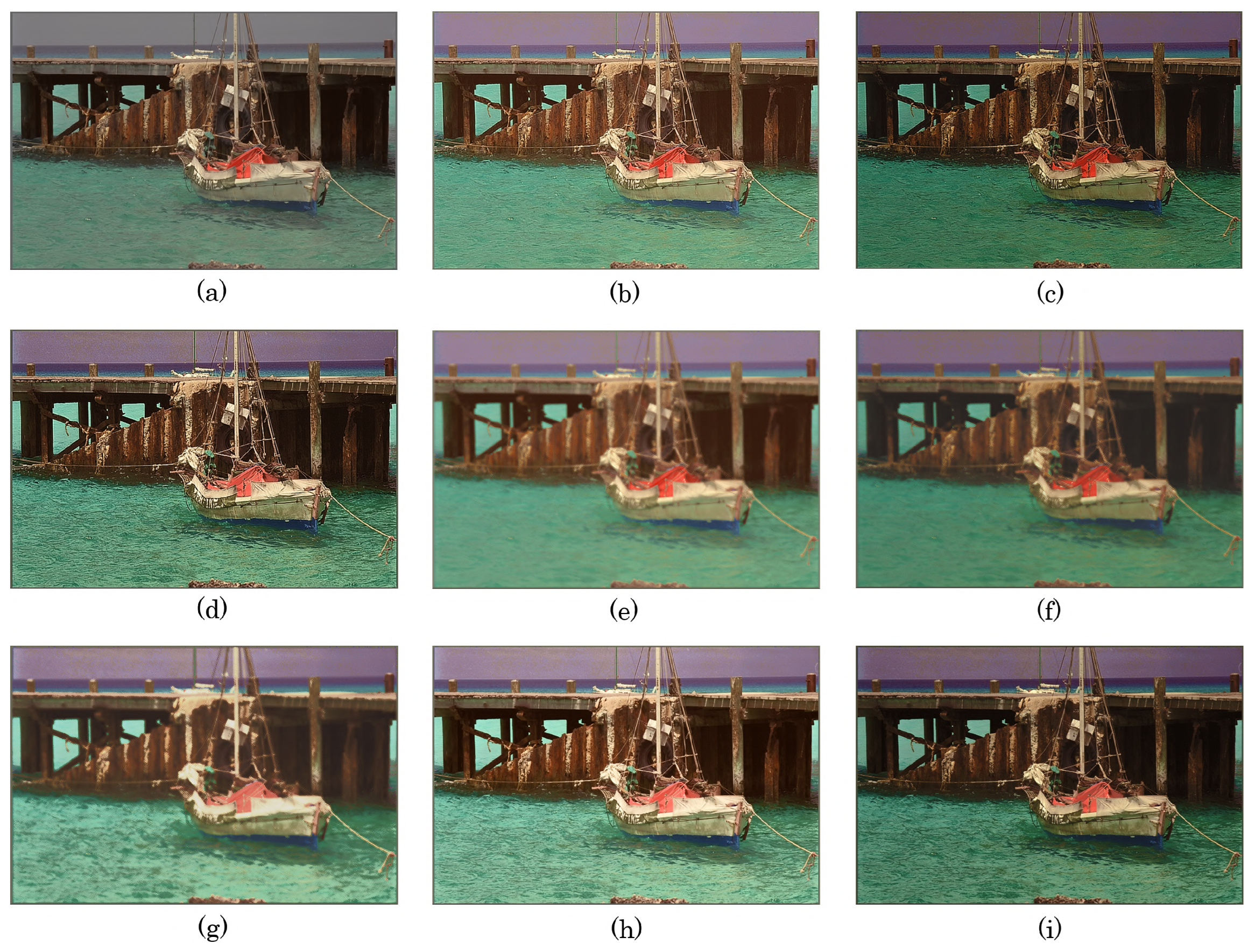

4.2. Visual and Quantitative Evaluation

4.3. Maximum Level of Each Process

4.4. Reversibility

4.5. Effectiveness of Embedding Process

4.6. Discussion on Coregulation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wu, H.-T.; Dugelay, J.-L.; Shi, Y.-Q. Reversible image data hiding with contrast enhancement. IEEE Signal Process. Lett. 2015, 22, 81–85.

- Jafar, I.F.; Darabkh, K.A.; Saifan, R.R. SARDH: A novel sharpening-aware reversible data hiding algorithm. J. Vis. Commun. Image Represent. 2016, 39, 239–252.

- Zhang, T.; Hou, T.; Weng, S.; Zou, F.; Zhang, H.; Chang, C.-C. Adaptive reversible data hiding with contrast enhancement based on multi-histogram modification. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5041–5054.

- Wu, H.-T.; Tang, S.; Huang, J.; Shi, Y.-Q. A novel reversible data hiding method with image contrast enhancement. Signal Process. Image Commun. 2018, 62, 64–73.

- Wu, H.-T.; Mai, W.; Meng, S.; Cheung, Y.-M.; Tang, S. Reversible data hiding with image contrast enhancement based on two-dimensional histogram modification. IEEE Access 2019, 7, 83332–83342.

- Wu, H.-T.; Huang, J.; Shi, Y.-Q. A reversible data hiding method with contrast enhancement for medical images. J. Vis. Commun. Image Represent. 2015, 31, 146–153.

- Gao, G.; Wan, X.; Yao, S.; Cui, Z.; Zhou, C.; Sun, X. Reversible data hiding with contrast enhancement and tamper localization for medical images. Inf. Sci. 2017, 385–386, 250–265.

- Yang, Y.; Zhang, W.; Liang, D.; Yu, N. A ROI-based high capacity reversible data hiding scheme with contrast enhancement for medical images. Multimed. Tools Appl. 2018, 77, 18043–18065.

- Kim, S.; Lussi, R.; Qu, X.; Kim, H.J. Automatic contrast enhancement using reversible data hiding. In Proceedings of the IEEE International Workshop on Information Forensics and Security, Rome, Italy, 16–19 November 2015; pp. 1–5.

- Kim, S.; Lussi, R.; Qu, X.; Huang, F.; Kim, H.J. Reversible data hiding with automatic brightness preserving contrast enhancement. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 2271–2284.

- Wu, H.-T.; Cao, X.; Jia, R.; Cheung, Y.-M. Reversible data hiding with brightness preserving contrast enhancement by two-dimensional histogram modification. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7605–7617.

- Wu, H.-T.; Wu, Y.; Guan, Z.; Cheung, Y.-M. Lossless Contrast Enhancement of Color Images with Reversible Data Hiding. Entropy 2019, 21, 910.

- Sugimoto, Y.; Imaizumi, S. An Extension of Reversible Image Enhancement Processing for Saturation and Brightness Contrast. J. Imaging 2022, 8, 27.

- Kumar, C.; Singh, A.K.; Kumar, P. A recent survey on image watermarking techniques and its application in e-governance. Multimed. Tools Appl. 2018, 77, 3597–3622.

- Shi, Y.-Q.; Li, X.; Zhang, X.; Wu, H.-T.; Ma, B. Reversible data hiding: Advances in the past two decades. IEEE Access 2016, 4, 3210–3237.

- Smith, A.R. Color gamut transform pairs. Comput. Graph. 1978, 12, 12–19.

- Hamachi, T.; Tanabe, H.; Yamawaki, A. Development of a Generic RGB to HSV Hardware. In Proceedings of the 1st International Conference on Industrial Applications Engineering 2013, Fukuoka, Japan, 27–28 March 2013; pp. 169–173.

- Zhou, Y.; Chen, Z.; Huang, X. A system-on-chip FPGA design for real-time traffic signal recognition system. In Proceedings of the 2016 IEEE International Symposium on Circuits and Systems (ISCAS), Montreal, QC, Canada, 22–25 May 2016; pp. 1778–1781.

- Thodi, D.M.; Rodriguez, J.J. Expansion Embedding Techniques for Reversible Watermarking. IEEE Trans. Image Process. 2007, 16, 721–730.

- Howard, P.G.; Kossentini, F.; Martins, B.; Forchhammer, S.; Rucklidge, W.J. The emerging JBIG2 standard. IEEE Trans. Circuits Syst. Video Technol. 1998, 8, 838–848.

- Kodak Lossless True Color Image Suite. Available online: http://www.r0k.us/graphics/kodak/ (accessed on 14 October 2022).

- The USC-SIPI Image Database. Available online: https://sipi.usc.edu/database/ (accessed on 14 October 2022).

- McMaster Dataset. Available online: https://www4.comp.polyu.edu.hk/~cslzhang/CDM_Dataset.htm (accessed on 14 October 2022).

- IHC Evaluation Resources. Available online: https://www.ieice.org/iss/emm/ihc/ (accessed on 14 October 2022).

- ITE Evaluation Resources. Available online: https://www.ite.or.jp/content/chart/uhdtv/ (accessed on 14 October 2022).

- Gao, M.Z.; Wu, Z.G.; Wang, L. Comprehensive evaluation for HE based contrast enhancement techniques. Adv. Intell. Syst. Appl. 2013, 2, 331–338.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 color-difference formula: Implementation notes, supplementary test data, and mathematical observations. Color Res. 2005, 30, 21–30.

| Database | # of Images | Image Size |
|---|---|---|
| Kodak [21] | 24 | 768 × 512 |
| SIPI [22] | 6 | 512 × 512 |
| McMaster [23] | 18 | 500 × 500 |
| IHC [24] | 6 | 4608 × 3456 |
| ITE [25] | 10 | 1920 × 1080 (converted from bit depth of 48 to 24) |

| Process | Level | Brightness: Difference in Standard Deviations | Brightness: Difference in Standard Deviations | Brightness: Difference | Brightness: Difference | Brightness: RCE | Brightness: RCE | Saturation: Difference | Saturation: Difference | Hue: Absolute Difference (degree) | Hue: Absolute Difference (degree) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Saturation Improvement | | = 0 | = 20 | = 0 | = 20 | = 0 | = 20 | = 0 | = 20 | = 0 | = 20 |
| Sharpening | 1 | 1.94 | 1.69 | 2.08 | 3.21 | 0.5076 | 0.5066 | 1.43 | 16.08 | 2.71 | 2.12 |
| Sharpening | 2 | 3.52 | 3.12 | 4.44 | 6.07 | 0.5138 | 0.5123 | 0.53 | 14.86 | 4.28 | 3.87 |
| Smoothing | 1 | −1.55 | −1.96 | 3.06 | 5.57 | 0.4939 | 0.4923 | 1.68 | 16.87 | 3.66 | 2.80 |
| Smoothing | 2 | −4.04 | −4.71 | 6.83 | 12.50 | 0.4842 | 0.4815 | 1.42 | 16.99 | 6.19 | 5.34 |
| Brightness increase | = 15 | −1.05 | −1.22 | 15.44 | 15.71 | 0.4959 | 0.4952 | 1.51 | 20.75 | 2.38 | 1.12 |
| Brightness increase | = 30 | −2.61 | −2.59 | 29.04 | 29.20 | 0.4898 | 0.4898 | 0.78 | 20.79 | 2.64 | 1.14 |
| Brightness decrease | = 15 | −0.80 | −0.84 | −12.29 | −11.91 | 0.4968 | 0.4967 | 0.06 | 12.02 | 2.86 | 2.28 |
| Brightness decrease | = 30 | −2.57 | −2.57 | −24.44 | −23.82 | 0.4899 | 0.4899 | −3.18 | 6.64 | 3.61 | 3.16 |
| CE | = 15 | 4.56 | 4.21 | 1.63 | 2.12 | 0.5179 | 0.5165 | −1.26 | 14.32 | 2.43 | 1.39 |
| CE | = 30 | 8.39 | 7.99 | 3.27 | 3.56 | 0.5329 | 0.5313 | −6.47 | 10.46 | 3.10 | 1.47 |
| CE by prev. [12] | = 15 | 5.64 | - | −1.10 | - | 0.5221 | - | −0.46 | - | 0.76 | - |
| CE by prev. [12] | = 30 | 10.14 | - | −0.55 | - | 0.5397 | - | −0.24 | - | 0.87 | - |
| CE by prev. [1] | = 15 | 4.88 | - | 3.23 | - | 0.5191 | - | 0.59 | - | 16.72 | - |
| CE by prev. [1] | = 30 | 7.27 | - | 9.40 | - | 0.5285 | - | 3.76 | - | 30.56 | - |
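The RCE values in the table can be cross-checked against the differences in standard deviations. The sketch below uses the commonly cited definition of relative contrast error for 8-bit images, RCE = 0.5 + (σ_out − σ_in) / 255. This definition is assumed here because it reproduces the tabulated values to within rounding. The function name is hypothetical, and the numbers are copied from the saturation-improvement = 0 columns.

```python
def rce_from_std_difference(std_diff, levels=256):
    """Relative contrast error from the change in brightness standard deviation.

    Assumed definition: 0.5 + (std_out - std_in) / (levels - 1).
    Values above 0.5 indicate increased contrast.
    """
    return 0.5 + std_diff / (levels - 1)


# (process, difference in standard deviations, RCE reported in the table)
rows = [
    ("Sharpening, level 1", 1.94, 0.5076),
    ("Smoothing, level 2", -4.04, 0.4842),
    ("Brightness increase, = 15", -1.05, 0.4959),
    ("CE, = 30", 8.39, 0.5329),
    ("CE by prev. [12], = 30", 10.14, 0.5397),
]

for name, std_diff, reported in rows:
    computed = rce_from_std_difference(std_diff)
    print(f"{name}: computed {computed:.4f}, reported {reported:.4f}")
```

Small discrepancies in the last digit are expected, because both tabulated columns are rounded averages.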

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sugimoto, Y.; Imaizumi, S. Reversible Image Processing for Color Images with Flexible Control. Appl. Sci. 2023, 13, 2297. https://doi.org/10.3390/app13042297